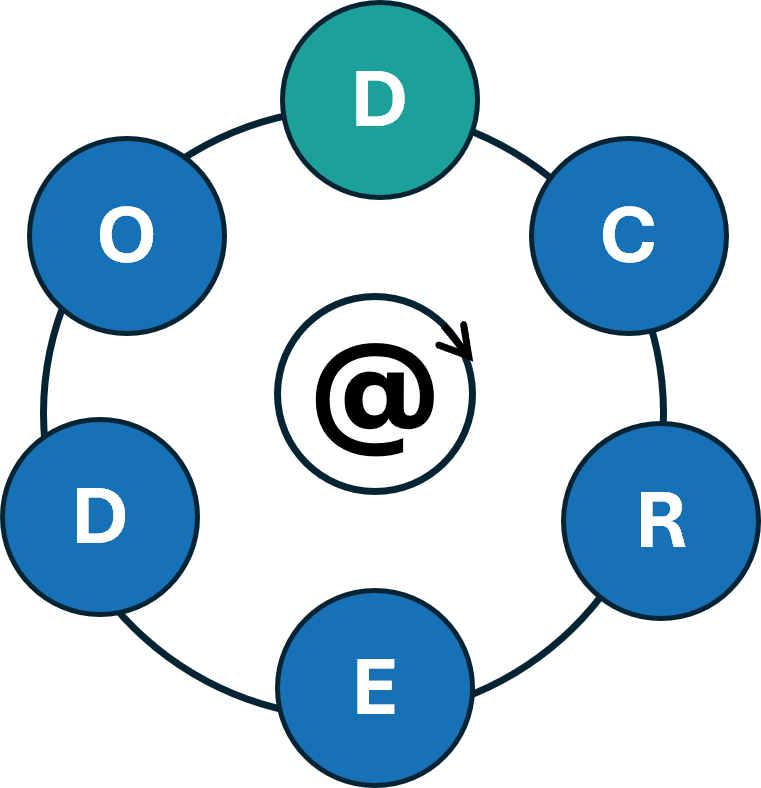

The D-CREDO Erasmus+ project started in September 2024 under the coordination of Jagiellonian University. It aims to enhance the skills of health professions students and their educators in using digital health tools in clinical reasoning. By teaching how these tools are used in the practice and education of clinical reasoning, the project seeks to promote a new generation of healthcare professionals who can use digital tools effectively and reflect critically on their practice to improve the quality of care they deliver. In parallel, the project aims to boost educators’ confidence and competence in incorporating these digital resources into their clinical reasoning curricula. You can find more information on the project’s website:

Clinical Reasoning Curricula in Health Professions Education: A Scoping Review

The article “Clinical Reasoning Curricula in Health Professions Education: A Scoping Review”, started by a group of researchers during the DID-ACT project, has recently been published in the Journal of Medical Education and Curricular Development. Congratulations to the authors and members of the DID-ACT team Maria Elvén, Elisabet Welin, Desiree Wiegleb Edström, Tadej Petreski, Magdalena Szopa, Steve Durning, and Samuel Edelbring! The full text is available here:

https://doi.org/10.1177/23821205231209093

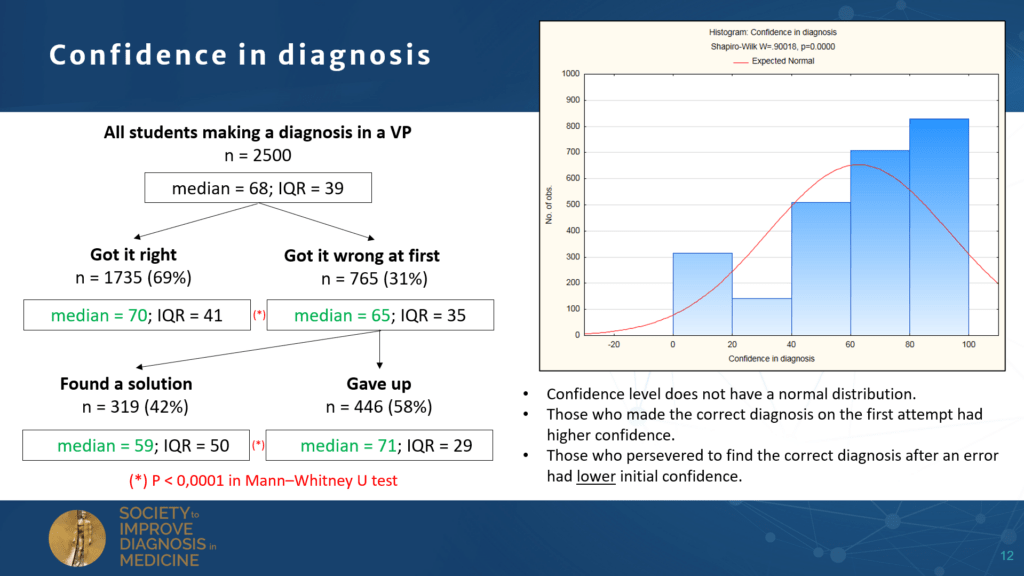

DID-ACT at the SIDM conference

The Society to Improve Diagnosis in Medicine (SIDM) held the European edition of its conference in Utrecht, the Netherlands, July 2-4, 2023. The theme of this year’s conference was “The Future of Diagnosis – Navigation in Uncertainty”. There were many presentations on the role of recent developments in artificial intelligence and its influence on the diagnostic process, patient involvement in diagnosis and their right to choose not to know parts of the diagnosis. The DID-ACT and iCoViP projects presented their findings on the design and evaluation of educational resources in the context of uncertainty in the clinical reasoning process.

Education in the handling of uncertainty, as addressed by the projects, will contribute to preparing health professionals to better deal with diagnostic challenges in the years to come.

DID-ACT – to be continued…

The DID-ACT project officially ended at the end of 2022, so we are now wrapping everything up and starting to implement our long-term sustainability plans.

In particular, we hope to continue our wonderful collaboration and expand our network in the future.

Please drop us a note if you are interested in joining – we are continuing to meet online on a regular basis and you are more than welcome to join us.

We will also continue to post news on this website and on our LinkedIn page, so stay tuned to learn more about upcoming events and updates to the DID-ACT curriculum.

To help you get started with the DID-ACT resources, we have compiled a PDF leaflet, which you can download from the website.

A big Thank You to all partners, associate partners, and all others who supported this project and contributed their time in multiple ways – without you this project could not have been implemented!

External Curriculum Review Results

From October 18th to November 30th 2022, we gathered data from a survey designed to evaluate various aspects of the DID-ACT clinical reasoning curriculum. These aspects included personal data, objectives, technology, impact, learning activities, and assessment/feedback.

A total of 32 reviewers participated in this External Quality Review. The survey was based on quantitative questions; however, reviewers were also asked to add comments in free-text boxes.

Overall, the feedback received was very positive. The majority of participants found that the objectives and expectations of the curriculum were clearly stated and that sufficient instructions were provided to work with and through the learning units. Most participants were able to navigate through the website and viewed the technology and media provided as playing a supporting role to the learning units. A large number of respondents (n=19) agreed that these learning units will improve students’/learners’ clinical reasoning abilities. The same number of respondents also agreed that the learning units will improve the way educators teach clinical reasoning. The learning activities were seen as helping learners engage with and achieve the objectives set forth in the learning units. The different forms of assessment and feedback were considered appropriate and allowed learners to assess their progress.

Some respondents provided comments with valuable feedback, such as:

- Some reviewers found the interface a bit “old-fashioned” and would have preferred a more convenient, modern interface, but one also mentioned that they did not experience any technical issues and still managed to follow the learning units with interest.

- Reviewers found the platform interesting and the virtual patients, which are part of the curriculum, “entertaining”, which indicates that these educational resources encourage learner interaction.

- Other reviewers commented on the excellent quality of the work created, and on how important and novel the curriculum is in filling the gap in clinical reasoning knowledge in medical education. They also stressed the importance of disseminating the curriculum to students and educators alike.

- Comments about the objectives included that they were clear and indicated that there would be no issues in understanding the process of the learning units.

Overall, we feel that we have gathered very positive feedback from our external reviewers in this survey, and are happy with its outcome. With all the reviews and feedback received, we will continue improving and sustaining the work that has been done in the DID-ACT project so far.

Piloting and evaluation of refined DID-ACT learning units at EDU

In fall 2022, we decided to pilot four additional learning units at EDU to test them after the refinement process, which took place earlier in the year. We piloted the following learning units (see descriptions) and obtained qualitative feedback from facilitators and students.

Student Curriculum – Person Centred Approach to clinical reasoning

This learning unit was piloted in 2021; however, we wanted to re-evaluate it to assess the refinements with learners and facilitators. The learning unit was taught by the same facilitator as in 2021. After the session, the facilitator reported that the structure of the session flowed better and that students were happier to engage in the session. Moreover, students thought the real-life example of a person-centred perspective had a clear positive impact on their learning.

Student Curriculum – Ethical aspects – patient management and treatment

This learning unit had not previously been piloted by any partner. Overall, both the facilitator and the learners found the learning unit useful and interesting, and commented that the interactive activities and case examples were very useful in delivering the content. Minor improvements were suggested.

Train the Trainer – Information gathering, generating differential diagnoses, decision making and treatment planning

This learning unit was previously piloted by partners from Maribor, Bern, and Krakow, but not EDU. As in the earlier pilots, we received positive feedback from participants after the session regarding the content and structure of the learning unit. The session also made the need for such teaching clearer.

Train the Trainer – Discussing and teaching about cognitive errors and biases.

This learning unit had not previously been piloted by partners. Again, the structure and content of the learning unit were well received, and participants especially appreciated the fishbone diagram and wiki of this learning unit.

Overall, the additional pilots indicated that the refinements made earlier in the year were successful in improving the DID-ACT curriculum, and we are happy with the results we achieved. Before implementing a learning unit, we recommend familiarizing oneself with the DID-ACT learning platform (Moodle), so that teaching can run more smoothly. The additional pilots also reaffirm what we saw in our needs analysis: there is a need for both teachers and learners to learn about clinical reasoning, and we hope that our curriculum can start filling this gap.

Call for Feedback

During November, we are carrying out external review rounds of our student and train-the-trainer learning units. We hope for valuable feedback on the structure, content, and learning & assessment activities to further improve our DID-ACT curriculum.

Interested in providing feedback?

Please log into our Moodle platform (link). If you do not yet have an account, you can easily create one. Then choose any theme or learning unit you are interested in and give us your feedback, either by filling out a short questionnaire or by leaving a comment on this post.

Inspiring face to face DID-ACT Project meeting in Kraków, Poland

On September 26th and 27th 2022, our Polish project partners hosted our final face to face project meeting in the beautiful city of Kraków, Poland.

There were feelings of melancholy, since we all really enjoy working together on this project, which is soon coming to an end. However, there was also optimism about future collaborations, the remaining work, and the online meetings.

In Kraków we had many inspiring discussions relating to the sustainability plans for the project, along with discussions about dissemination and our long-term integration plans.

On the first day, we presented the different approaches to integrating the clinical reasoning curricula at our institutions, including some discussion of the bottom-up and top-down integration approaches and the pros and cons of each. The results and outcomes of the project were also discussed, as well as some specifics about the finalization of the project. On the second day, we focused on the sustainability plans and further research to be published, and agreed on some dissemination activities.

We all learned a lot from each other’s fruitful ideas and the team spirit was strong!

DID-ACT at AMEE 2022 in Lyon, France

AMEE 2022, with more than 2400 international attendees, was a big event for the DID-ACT project this year! We were pleasantly surprised by the interest shown in the project during our presentations and E-poster over the days, and especially by the pre-conference workshop on “Teaching clinical reasoning toolkit”, which drew more attendees (from all over the world) than had registered. At the beginning of this workshop we introduced participants to the DID-ACT curriculum. This was followed by small-group work on three selected learning units, in which participants discussed the units and developed ideas for integrating them into their own curricula.

Another highlight was the E-Poster from one of our medical students, Melina Körner (University of Augsburg), who presented the two videos she created together with Ada Frankowska from Kraków and Elisa Schneider from Augsburg. The videos are designed to support educators in selecting clinical reasoning teaching methods.

Overall, we received a lot of positive feedback on the DID-ACT curriculum and the integration guide. Educators were particularly happy that the material is under a Creative Commons license and can therefore be used freely in clinical reasoning education.

The feedback we received at AMEE 2022 will be incorporated into the final work on the integration guideline and into our sustainability model.

DID-ACT at the hybrid Medical Education Forum in Kraków on Sep 28-29th 2022

We are excited to announce that members of DID-ACT will be presenting research from the project alongside other great work at the Medical Education Forum (MEF) 2022 on the 28th and 29th of September! DID-ACT partners from Poland, Germany, and the US will be present. The MEF program includes the following topics and slots related to clinical reasoning research:

| Topic | Date | Presenter |
|---|---|---|
| Teaching clinical reasoning: four key lessons from the literature | 28/9/22 11:40-12:00 | Steven Durning, Uniformed Services University of the Health Sciences, Bethesda, USA |
| Building a European collection of virtual patients for clinical reasoning training within the iCoViP project | 28/9/22 12:00-12:20 | Inga Hege, University of Augsburg, Germany |
| Developing, implementing, and disseminating an adaptive clinical reasoning curriculum for healthcare students and educators: the outcomes of a European project | 28/9/22 12:20-12:40 | Andrzej A. Kononowicz, Jagiellonian University, Poland |
| Workshop: Tips and tricks on how to teach clinical reasoning effectively supported by virtual patients | 29/9/22 11:30-13:45 | Inga Hege, Małgorzata Sudacka, Andrzej A. Kononowicz, Steven Durning |

For more information on the program, which includes a whole session about clinical reasoning, please see the program.

For more information regarding registration, please go to registration.

We would be very excited to see you in person or virtually at the MEF2022 and talk about clinical reasoning in healthcare education!