After we had tested the train-the-trainer courses of the DID-ACT curriculum, it was time to evaluate the quality of the learning units for students. We conducted a series of pilot studies that validated five different learning units in eight evaluation events across all partner institutions, including associate partners. We also recorded student activities in the virtual patient collection, which is connected with the DID-ACT curriculum and available for deliberate practice. In addition, we evaluated the usability of the project’s learning management system in several test scenarios.

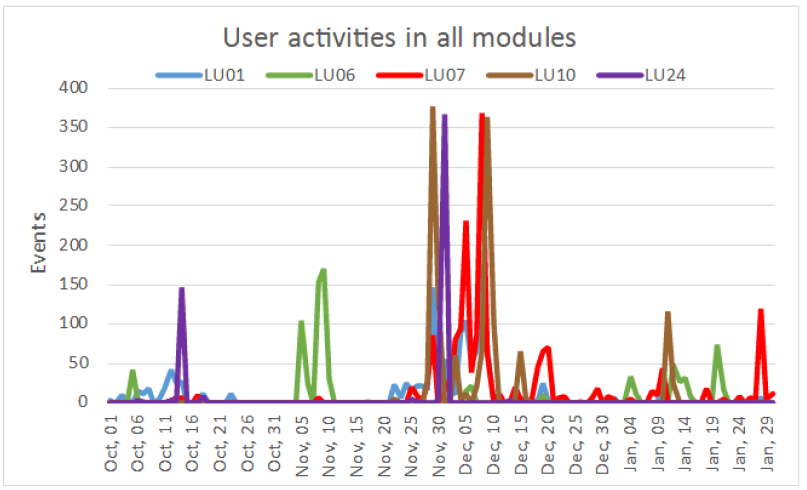

Overview of student activities in the piloted learning units

Overall, students agreed to a large extent that the piloted DID-ACT learning units improved their clinical reasoning skills (average of 5.75 on a 7-point Likert scale). As a special strength of the curriculum, students frequently named the virtual patients integrated with the learning units. Another highlight was the small-group discussions, often conducted in multinational teams, which broadened their views on clinical reasoning. However, navigation in the learning management system (Moodle) was a challenge in the tested version of the curriculum implementation. As a consequence, we analyzed these data further and conducted a series of usability tests. These analyses and tests led to a process for addressing the issues wherever possible. We also received several requests for modifications of the developed learning material, which we will address in the next deliverable, in which we refine the courses based on the pilot implementation.

You can find the full report, which was published at the end of March 2022, here: Evaluation and analysis of the pilot implementations of the student curriculum